When the Model Can't Be Fixed

Protecting play in a computer vision pipeline.

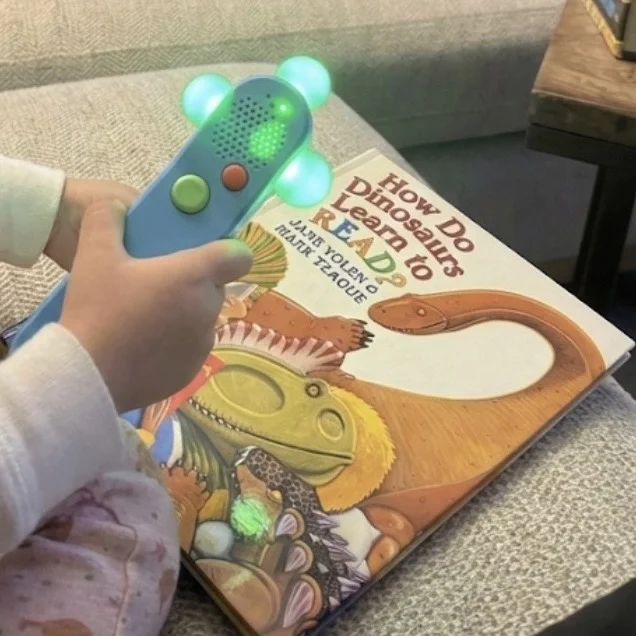

Arti points her wand at a picture of a dinosaur. It roars. Magic.

Kibeam Learning's wand in action.

At Kibeam Learning, I worked at the seam between Creative and Engineering. Computer Vision (CV) models powered Kibeam's screenless, interactive experiences. When everything works, it feels like magic. When it breaks... the magic stops.

Inference can fail for many reasons.

This case study covers three CV issues from How Do Dinosaurs Learn to Read?, each requiring a different level of fix. The third taught me when to stop debugging and start designing around the failure.

Ticket #DINO-80: Know Where to Look

Arti points at the tiny book in the dino's claws and waits. Silence.

QA flagged an inference issue. The model wasn't detecting the r.book target. Earlier at Kibeam, my first instinct would have been to dive into the logs and verify whether the CV model could see the object at all. But experience taught me to check the script first.

Without a component, r.book's response would never trigger.

Sure enough, a writer missed adding an entry for r.book's hover component. This instruction tells the system what to do when the model detects a target. The model was seeing just fine. The system just didn't know how to respond.
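Here is a minimal sketch of why a missing component produces silence, and of the kind of script check that would catch it before QA does. Only the target name r.book and the idea of a hover component come from the ticket. The dictionary layout, the `r.dino_head` entry, the file name, and both function names are assumptions made for illustration, not Kibeam's actual script format.

```python
from typing import Optional

# Hypothetical script data: target name -> component type -> response.
# The structure and every name except "r.book" and "hover" are assumptions.
script = {
    "r.dino_head": {"hover": "roar.wav"},
    "r.book": {},  # the writer forgot the hover entry
}


def respond(target: str, event: str = "hover") -> Optional[str]:
    """Return the scripted response for a detected target, or None if there is none."""
    return script.get(target, {}).get(event)


def missing_components(targets, event: str = "hover"):
    """List detectable targets that have no scripted response for the event."""
    return [t for t in targets if respond(t, event) is None]


# The model can detect both targets, but only one has a response.
print(missing_components(["r.dino_head", "r.book"]))
```

A check like `missing_components` separates "the model can't see it" from "the system has nothing to say" without opening a single inference log.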

I added the component, pushed a new build, tested locally, and...

"ROAAAAAAR."

Ticket #DINO-84: Acceptable Trade-offs

Arti points at words, listening carefully as they are read aloud. Suddenly, the wand announces, "New page!" But I didn't turn it yet? The book just stopped making sense.

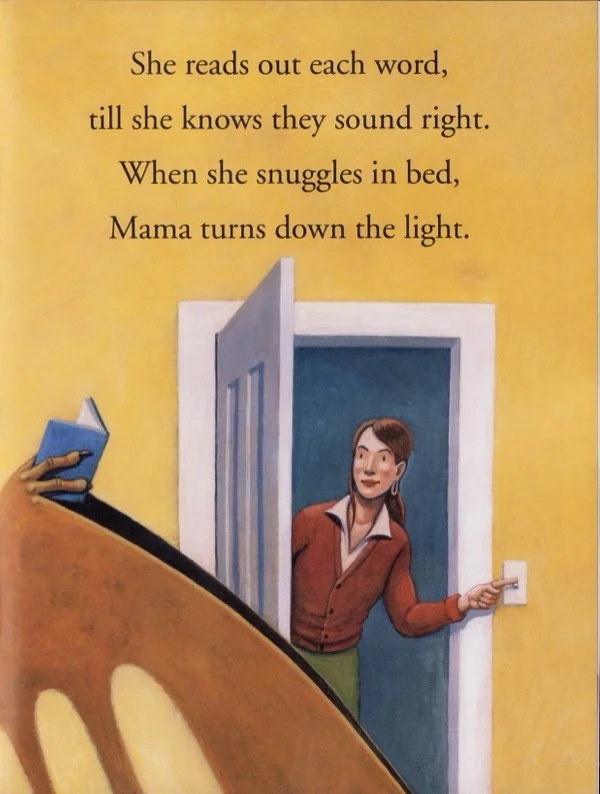

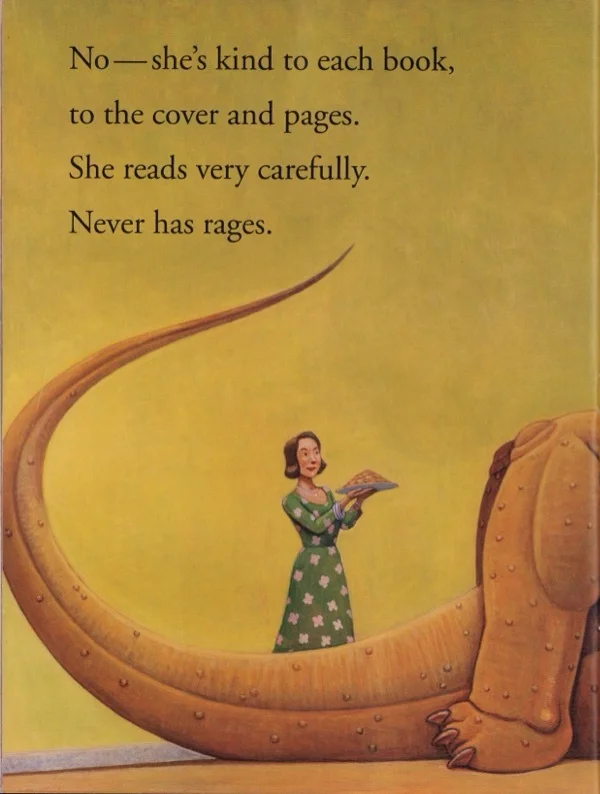

QA flagged "ejections," where the wand jumps the reader to a different page, when pointing at the text on page 21. I narrowed it down to the area around "She reads," which appears on both page 21 and page 18. The model couldn't tell those spots apart.

Page 21

Page 18

"She reads..." appears on both pages and the model couldn't tell them apart.

I started with the least invasive fix, adjusting the FOV (field of view). This parameter controls how much of the page the model sees. More context should help it distinguish between pages. I bumped up the value in small increments. No improvement. At FOV 37, I started seeing false triggers elsewhere. Ugh, new problems in exchange for no solution.

Next tool: relabeling and retraining. I added specific labels to each text region and retrained the model. This approach had worked on a different book. But on this one, it didn't. The model still ejected the user from page 21 to 18.

I escalated to our ML engineer. He suggested trying a different training recipe. Still no fix.

At this point, I'd exhausted technical options that would give us a "perfect" fix. So I shifted to negotiation.

I proposed an exclusion rule to our producer. When the user is on page 21, the system ignores page 18's text targets entirely. No more ejections, but there's a trade-off: users can't navigate directly from page 21 to page 18 by pointing at page 18's text. They can still get there by pointing at any other element on page 18.
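The rule can be sketched as a filter that runs between the model's detections and the navigation logic. The page numbers come from the ticket. The `Detection` fields, the `"text"` and `"art"` kinds, the target names, and the `EXCLUSIONS` table are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    page: int
    target: str
    kind: str  # "text" or "art" (hypothetical categories)


# Current page -> pages whose text targets are ignored while on that page.
EXCLUSIONS = {21: {18}}


def filter_detections(current_page, detections):
    """Drop text detections from pages excluded for the current page."""
    blocked = EXCLUSIONS.get(current_page, set())
    return [
        d for d in detections
        if not (d.kind == "text" and d.page in blocked)
    ]


she_reads_18 = Detection(page=18, target="she_reads", kind="text")
dino_18 = Detection(page=18, target="dino", kind="art")

# On page 21, the ambiguous text is ignored but the art still navigates.
print(filter_detections(21, [she_reads_18, dino_18]))
```

Scoping the rule to text targets only is what keeps the constraint minor: every non-text element on page 18 still works as a way in.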

We agreed that a minor navigation constraint was better than a disorienting failure. I'd tried everything else first. That made it easy to say yes.

The fix shipped.

Ticket #DINO-101: The Wall

Holding the wand, Arti's hand drifts juuust off the edge of the page. Nothing should happen. Instead, the wand launches into a mini-game.

here launches dino_head game?!

The model was supremely confident, but supremely wrong.

It falsely detected an object on the other side of the book.

QA filed a tricky inference ticket days before our ship date. Pointing off-book near the bottom of page 21 triggered the model to detect the dinosaur head on page 20... way on the opposite side of the book. I ran through my complete diagnostic toolkit: FOV adjustments, confidence thresholds, relabeling, and retraining. Nada. Nothing worked.

I escalated to our ML engineer. He attempted additional training, came back, and shook his head: "Unfortunately, it behaves about the same."

With our deadline approaching, I brought the problem to my Creative Director. He offered a reframe I hadn't considered: "What if we move the game trigger off the dino head completely? Assign it to the book in the dino's claws instead. Then we put a short squawk sound effect on the dino head."

Move the high-stakes interaction to the stable target. Backfill with something harmless.

I immediately clocked the elegance. If the model misfires and detects the dino head when a child points off-book, they hear a goofy "squawk." Unexpected, but harmless versus a catastrophic two-minute game they didn't ask for.
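One way to picture the swap is as a check on what each unreliable target is allowed to trigger. This is an illustrative sketch, not how the title was actually configured. The two-minute game and the dino head and book targets come from the ticket. The squawk duration, the `MAX_SAFE_SECONDS` threshold, and the function name are assumptions.

```python
# Response durations in seconds. The game length is from the ticket;
# the squawk length is an illustrative guess.
DURATIONS = {"game": 120, "squawk": 1}

# Targets the model is known to detect falsely.
UNSTABLE = {"dino_head"}

# Hypothetical limit on how long a mistaken response may run.
MAX_SAFE_SECONDS = 5


def risky_assignments(assignments):
    """Return unstable targets whose response is too long to trigger by mistake."""
    return [
        target for target, response in assignments.items()
        if target in UNSTABLE and DURATIONS[response] > MAX_SAFE_SECONDS
    ]


before = {"dino_head": "game"}
after = {"dino_head": "squawk", "book": "game"}

print(risky_assignments(before))
print(risky_assignments(after))
```

The model's error rate is identical in both configurations. Only the cost of each error changes.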

We'd already launched games off dinos' books elsewhere in the title, so the pattern fit. I built the change that afternoon. Tested it. Smiled.

We shipped, and the book went live on time. Still a model failure. But now, graceful.

"When I filed computer vision tickets, Choksi would come back with a full breakdown of what he'd tried before proposing a workaround. I always knew he'd exhausted all options, and he communicated every adjustment throughout the process."

Alyssa, QA Engineer, Kibeam Learning